(Images made by the author with Leonardo AI)

While general-purpose large language models (LLMs) like GPT-3 are highly versatile, they often perform less well on domain-specific tasks. This limitation has led to the development of domain-specific language models tailored to fields like medicine and science. In finance, BloombergGPT takes a mixed approach: it combines the breadth of general models with the precision of domain-specific ones to meet the complexity and particular needs of the field.

Drawing on Bloomberg’s research paper released on March 30, 2023, this blog post covers the development and performance of BloombergGPT and its significance for the finance industry.

Table of Contents

- What is BloombergGPT?

- Architecture

- Datasets

- Evaluation on Various Benchmarks

- Broader implications

- Conclusion

- References

- Additional Information

What is BloombergGPT?

On March 30, 2023, Bloomberg released a research paper introducing BloombergGPT, a 50-billion parameter LLM focused on finance. The model was trained on a massive dataset that includes a large amount of financial data, making it well-suited for financial tasks.

Applications of BloombergGPT in the finance industry include:

- Sentiment analysis to identify the sentiment of financial news articles and other text.

- Named entity recognition to identify and extract named entities from financial text, such as company names, ticker symbols, and product names.

- Question answering for financial data inquiries.

- Text generation for financial content, such as summaries, headlines, and captions.
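
Tasks like these are usually posed to an LLM as plain-text prompts. The sketch below is a minimal illustration of a few-shot sentiment prompt. The function name `build_sentiment_prompt`, the prompt layout, and the example headlines are all hypothetical and are not taken from the BloombergGPT paper.

```python
def build_sentiment_prompt(examples, headline):
    """Build a few-shot prompt from (text, label) pairs plus one unlabeled headline.

    The layout ("Headline:" / "Sentiment:") is an illustrative assumption,
    not the prompt format used by Bloomberg.
    """
    lines = []
    for text, label in examples:
        lines.append(f"Headline: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")  # blank line between demonstrations
    lines.append(f"Headline: {headline}")
    lines.append("Sentiment:")  # the model is expected to continue from here
    return "\n".join(lines)


# Made-up demonstrations, for illustration only.
demo_examples = [
    ("Company A beats quarterly profit estimates", "positive"),
    ("Company B cuts full-year revenue guidance", "negative"),
]

prompt = build_sentiment_prompt(demo_examples, "Company C announces share buyback")
print(prompt)
```

The resulting string would be sent to a language model, and the text it generates after the final "Sentiment:" would be read as the predicted label.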

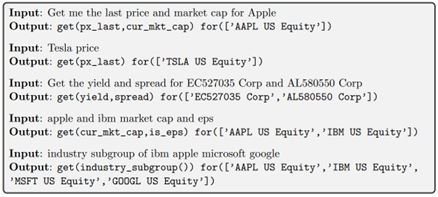

Unlike popular chatbots like Bard or ChatGPT, BloombergGPT is not a standalone service. Instead, Bloomberg plans to integrate it into the Bloomberg Terminal (BT), a subscription-based platform that offers access to an extensive array of financial data and analytical tools. This integration would let Bloomberg’s customers use natural language queries to access BT’s financial data and tools instead of writing complex Bloomberg Query Language (BQL) commands, as illustrated below. BQL is a specialized query language that Bloomberg developed for accessing and analyzing financial data on its platform.

Source: BloombergGPT: A Large Language Model for Finance, Figure 4

Architecture

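BloombergGPT is a decoder-only causal language model whose architecture is based on BLOOM, a large open-source language model developed through a collaboration between academia and industry to advance natural language processing and promote responsible AI development. BloombergGPT borrows BLOOM’s design but, as Bloomberg’s announcement puts it, was built from scratch for finance.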

The “decoder-only causal” nature means that it generates text one token at a time, predicting each token from the context that precedes it. BloombergGPT stacks 70 transformer decoder blocks. The transformer is a neural network architecture widely used for natural language processing tasks such as machine translation and text generation. In simpler terms, this design is what lets BloombergGPT generate text and answer questions.
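
To make the idea of causal, step-by-step generation more concrete, here is a minimal sketch in plain Python. It is not BloombergGPT code: `causal_mask`, `generate`, and the toy lookup table standing in for a model are hypothetical names introduced only for illustration.

```python
def causal_mask(seq_len):
    """mask[i][j] is True when position i is allowed to attend to position j.

    In a causal model each position sees itself and earlier positions only.
    """
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def generate(next_token_fn, prompt_tokens, max_new_tokens, eos_token=None):
    """Autoregressive loop: repeatedly predict one token and append it."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        nxt = next_token_fn(tokens)  # prediction depends only on earlier tokens
        if nxt == eos_token:
            break
        tokens.append(nxt)
    return tokens


# Toy stand-in for a trained model: it looks only at the last token.
# A real transformer conditions on the whole preceding context.
TOY_TABLE = {"shares": "rose", "rose": "after", "after": "earnings"}


def toy_next_token(tokens):
    return TOY_TABLE.get(tokens[-1], "<eos>")


mask = causal_mask(3)
generated = generate(toy_next_token, ["shares"], max_new_tokens=10, eos_token="<eos>")
print(mask)
print(generated)
```

The lower-triangular mask is what makes the model "causal": no position can look at tokens that come after it, so generation can proceed strictly left to right.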

Datasets

To develop an AI model that understands both general and finance-specific language, the researchers took a mixed approach. Other domain-specific models are typically either trained only on domain data or adapted from an existing general model. BloombergGPT, by contrast, was trained on both finance-specific and general data sources, which makes it both specialized and versatile.

To train BloombergGPT, the researchers used a portion of a dataset of over 700 billion tokens. This dataset has two main components. The first, FinPile, is a dataset of 363 billion tokens developed by Bloomberg that focuses exclusively on English financial materials such as news articles and regulatory filings. The second, at 345 billion tokens, draws on Wikipedia, C4, and The Pile, which are multi-domain public datasets commonly used to train other LLMs. Together, these datasets allowed the researchers to build a model proficient in both financial and general language.
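
As a quick check of the figures above, the short calculation below adds the two components and works out each one's share of the full corpus. The variable and function names are illustrative, and the shares describe the full dataset, not necessarily the portion actually used in training.

```python
# Token counts in billions, as quoted in the text above.
FINPILE_TOKENS_B = 363
PUBLIC_TOKENS_B = 345


def corpus_mix(finpile_b, public_b):
    """Return (total, finpile_share, public_share) for the two components."""
    total = finpile_b + public_b
    return total, finpile_b / total, public_b / total


total_b, finpile_share, public_share = corpus_mix(FINPILE_TOKENS_B, PUBLIC_TOKENS_B)
print(f"Total: {total_b}B tokens")               # Total: 708B tokens
print(f"FinPile share: {finpile_share:.1%}")     # FinPile share: 51.3%
print(f"Public data share: {public_share:.1%}")  # Public data share: 48.7%
```

The split is close to even, which matches the stated goal of a model that is strong in finance without giving up general language ability.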

Evaluation on Various Benchmarks

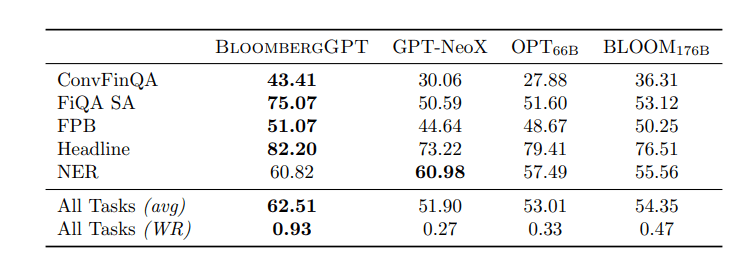

BloombergGPT was evaluated on several sets of benchmarks: standard LLM benchmarks, open financial benchmarks, and Bloomberg’s internal benchmarks aligned with its intended use cases. The results support the mixed training approach: the model outperforms existing models on financial tasks while remaining competitive on general NLP benchmarks. For example, the table below compares BloombergGPT’s performance with that of other LLMs across five finance-related tasks, highlighting its specialized proficiency in the financial domain.

Source: BloombergGPT: A Large Language Model for Finance, Table 8

Broader implications

The BloombergGPT paper contributes to broader research by setting a precedent for how a domain-specific LLM can be built. It also offers valuable insights for the research community, covering its training data, model size, tokenization strategy, and more.

However, while recognizing the value of openness and collaboration with researchers, the paper also points to challenges in releasing the model. Notably, FinPile, an extensive proprietary financial dataset built by Bloomberg, plays a crucial role in BloombergGPT’s performance on finance-related tasks. The model is also trained on a large amount of publicly available data. To mitigate concerns about data leakage attacks and the potential for misuse through imitation, Bloomberg has opted not to release the model to the broader research community.

Conclusion

BloombergGPT is a notable advance in LLMs for domain-specific applications like finance. It outperforms other LLMs on financial benchmarks while maintaining competitive performance on general-purpose LLM benchmarks. This positions BloombergGPT as a valuable asset for the financial sector and should improve the user experience for Bloomberg’s customers. The achievement is also likely to motivate other financial data companies, which manage extensive data repositories, to develop their own finance-centric language models, benefiting financial professionals and the broader investing community.

References

BloombergGPT: A Large Language Model for Finance, Shijie Wu, Ozan Irsoy, Steven Lu, et al., March 30, 2023

Bloomberg plans to integrate GPT-style A.I. into its terminal, Kif Leswing, CNBC, April 13, 2023

Introducing BloombergGPT, Bloomberg’s 50-billion parameter large language model, purpose-built from scratch for finance, Bloomberg, March 30, 2023

Additional Information

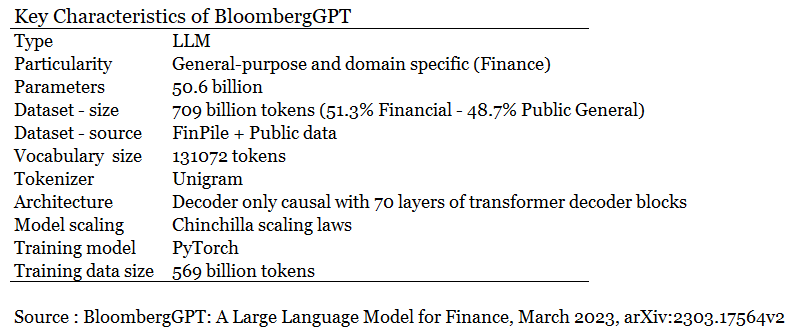

BloombergGPT’s key characteristics

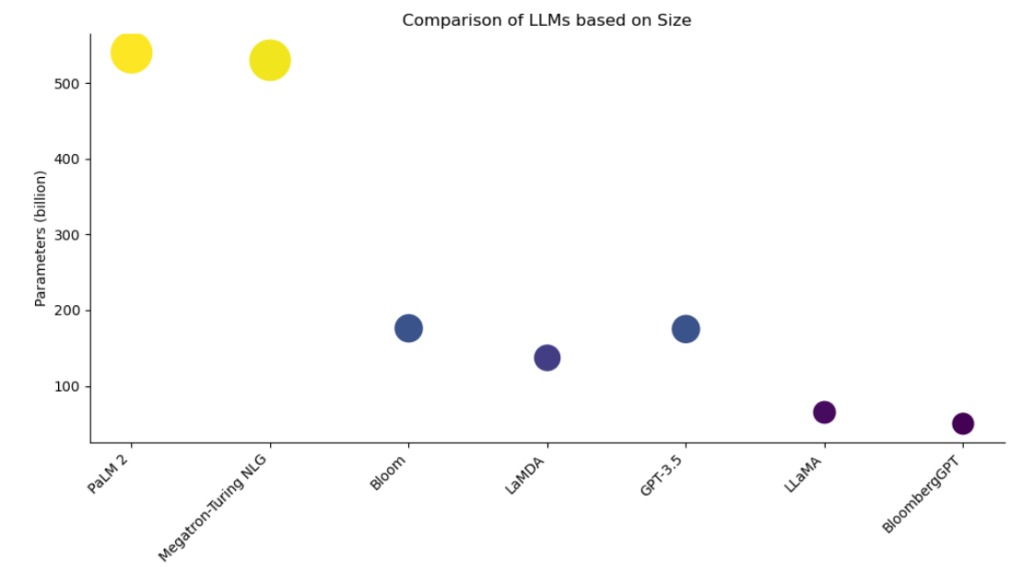

BloombergGPT compared to other popular LLMs
