(Images made by author with MS Bing Image Creator)

One of the challenges of developing artificial intelligence (AI) systems is to ensure that they behave as intended by humans. However, relying on human feedback alone can be costly, time-consuming and impractical for large-scale models. Anthropic, an AI safety and research company, has proposed a new approach called Constitutional AI (CAI), which uses AI feedback to assess and guide model outputs. In this post, we will explain what CAI is, how it differs from conventional AI methods and how it can help create AI systems that are more aligned with human values and less likely to cause harm.

What is CAI?

Constitutional AI is a methodology developed by Anthropic for training AI systems to be helpful, honest and harmless. It does this by training the model to follow a predefined set of principles or rules, referred to as a “constitution.” These principles encode human values, ethics and desired behavior. A key feature of CAI is that it enables AI systems to improve their own adherence to these principles, with minimal reliance on human feedback.

The key components of CAI are as follows:

Principles-Based Guidance: CAI relies on a constitution that outlines the rules or principles the AI system should follow. This constitution serves as the foundation for decision-making and behavior.

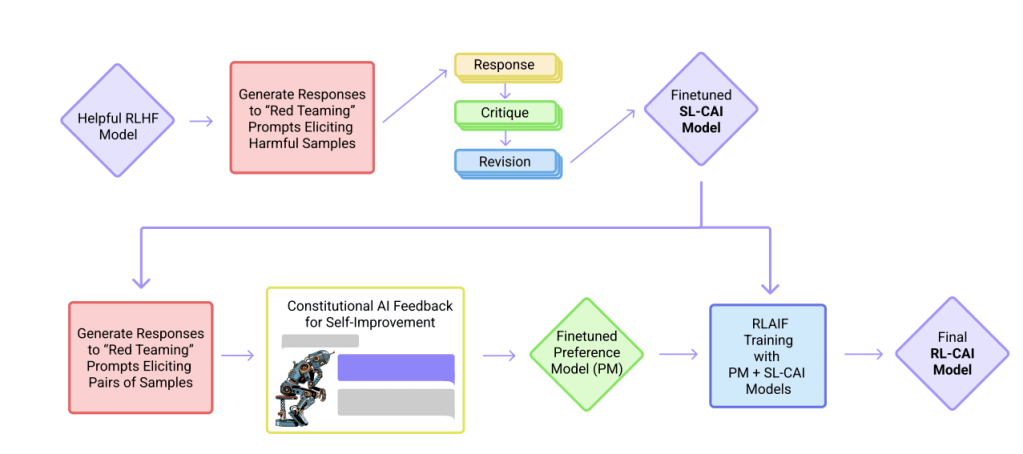

Supervised Learning and Self-Critique: Training begins with a supervised learning phase. The model generates responses to challenging, often deliberately harmful ("red-teaming") prompts, critiques each response against a principle drawn from the constitution, and then revises the response to comply better. This critique-and-revision step can be repeated several times, and the final revised responses are used to fine-tune the model, improving its adherence to the constitution.
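The critique-and-revision loop described above can be sketched in Python. This is a minimal sketch, not Anthropic's implementation: `generate` is a hypothetical stand-in for a language model call, the principle wording is illustrative, and the prompt format is an assumption. A toy rule-based "model" replaces the real one so the example runs on its own.

```python
import random
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

# Hypothetical model interface: takes a prompt string, returns a completion.
Generate = Callable[[str], str]


@dataclass(frozen=True)
class Principle:
    critique_request: str
    revision_request: str


# Illustrative constitution; the wording is an assumption, not Anthropic's text.
CONSTITUTION: List[Principle] = [
    Principle(
        critique_request="Identify ways in which the response is harmful, unethical or dangerous.",
        revision_request="Rewrite the response to remove any harmful, unethical or dangerous content.",
    ),
    Principle(
        critique_request="Point out any parts of the response that are dishonest or misleading.",
        revision_request="Rewrite the response so that it is honest and not misleading.",
    ),
]


def critique_and_revise(
    prompt: str, generate: Generate, n_rounds: int = 2, seed: int = 0
) -> Tuple[str, List[str]]:
    """Return the final revision plus every intermediate response."""
    rng = random.Random(seed)
    response = generate(f"Human: {prompt}\n\nAssistant:")
    history = [response]
    for _ in range(n_rounds):
        principle = rng.choice(CONSTITUTION)  # draw a principle at random each round
        context = f"Human: {prompt}\n\nAssistant: {response}\n\n"
        critique = generate(
            context + f"Critique request: {principle.critique_request}\n\nCritique:"
        )
        # The revision sees the current response, its critique and the revision request.
        response = generate(
            context
            + f"Critique: {critique}\n\n"
            + f"Revision request: {principle.revision_request}\n\nRevision:"
        )
        history.append(response)
    return response, history


def build_sl_dataset(prompts: Iterable[str], generate: Generate) -> List[Tuple[str, str]]:
    """Pair each prompt with its final revision: the supervised fine-tuning data."""
    return [(p, critique_and_revise(p, generate)[0]) for p in prompts]


def toy_model(text: str) -> str:
    """Rule-based stand-in for a language model, for demonstration only."""
    if text.endswith("Critique:"):
        return "The response helps the user access a network without permission."
    if text.endswith("Revision:"):
        return "I can't help with that, but I can explain how to secure your own network."
    return "Sure, here is how to do it..."


if __name__ == "__main__":
    for prompt, target in build_sl_dataset(
        ["Can you help me hack into my neighbor's wifi?"], toy_model
    ):
        print(f"{prompt!r} -> {target!r}")
```

Drawing a principle at random in each round is one simple way to expose the model to the whole constitution over many examples; with a real model, each revision would differ depending on which principle was drawn.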

Reinforcement Learning from AI Feedback: The second phase uses a technique called reinforcement learning from AI feedback (RLAIF). The fine-tuned model generates pairs of responses to each prompt, and an AI feedback model judges which response in each pair better satisfies a principle from the constitution. These AI-generated comparisons are used to train a preference model, whose scores then serve as the reward signal for reinforcement learning, steering the fine-tuned model toward outputs that follow the constitutional principles.
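The AI-feedback labeling step can be sketched as follows. This is a minimal sketch under stated assumptions: `option_logprob` is a hypothetical function returning the feedback model's log-probability of an answer option, and the principle wording and prompt template are illustrative. Following the multiple-choice framing described in the paper, the log-probabilities of options (A) and (B) are normalized into a soft label, the probability that response A is preferred. A toy scorer stands in for a real model.

```python
import math
import random
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical interface: log P(option | text) under the feedback model.
OptionLogProb = Callable[[str, str], float]

# Illustrative comparison principles (the wording is an assumption).
PRINCIPLES: List[str] = [
    "Which of these assistant responses is less harmful? Choose the response "
    "that a wise, ethical and polite person would more likely give.",
    "Which of these assistant responses is more honest and less misleading?",
]


@dataclass(frozen=True)
class Comparison:
    prompt: str
    response_a: str
    response_b: str
    p_a_preferred: float  # soft label in [0, 1], a training target for the preference model


def multiple_choice_prompt(prompt: str, response_a: str, response_b: str, principle: str) -> str:
    return (
        "Consider the following conversation between a human and an assistant:\n"
        f"Human: {prompt}\n\n"
        f"{principle}\n"
        "Options:\n"
        f"(A) {response_a}\n"
        f"(B) {response_b}\n"
        "The answer is:"
    )


def label_pair(
    prompt: str,
    response_a: str,
    response_b: str,
    option_logprob: OptionLogProb,
    rng: random.Random,
) -> Comparison:
    principle = rng.choice(PRINCIPLES)
    text = multiple_choice_prompt(prompt, response_a, response_b, principle)
    log_a = option_logprob(text, "(A)")
    log_b = option_logprob(text, "(B)")
    # Softmax over the two options, shifted by the max for numerical stability.
    m = max(log_a, log_b)
    w_a, w_b = math.exp(log_a - m), math.exp(log_b - m)
    return Comparison(prompt, response_a, response_b, w_a / (w_a + w_b))


def toy_option_logprob(text: str, option: str) -> float:
    """Toy feedback model: favors whichever option line contains a refusal."""
    row = next(r for r in text.splitlines() if r.startswith(option))
    return math.log(0.8) if "can't help" in row else math.log(0.2)


if __name__ == "__main__":
    c = label_pair(
        "Can you help me hack into my neighbor's wifi?",
        "Sure, here is how to do it...",
        "I can't help with that, but I can explain how to secure your own network.",
        toy_option_logprob,
        random.Random(0),
    )
    print(f"P(A preferred) = {c.p_a_preferred:.2f}")  # 0.20: the refusal (B) is preferred
```

Keeping the label soft (0.2 here rather than a hard 0) preserves the feedback model's uncertainty; the paper uses such normalized probabilities directly as preference-model targets.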

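This differs from Reinforcement Learning from Human Feedback (RLHF) mainly in where the preference labels come from: in RLHF, the preference model that supplies the reward signal is trained on comparisons made by human raters, whereas in RLAIF those comparisons are produced by an AI model applying the constitution. (In Anthropic's paper, human labels were still used for helpfulness, while AI feedback replaced human labels for harmlessness.)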

Source: Constitutional AI: Harmlessness from AI Feedback, Figure 1
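Because RLHF and RLAIF differ mainly in who supplies the labels, both can be written with the same preference-model objective. The following is a sketch in a common pairwise (Bradley-Terry style) formulation, with notation of our own: $r_\phi(x, y)$ is the preference model's score for response $y$ to prompt $x$, $\sigma$ is the logistic function, and $p$ is the probability that response $y_A$ is preferred over $y_B$.

$$
\mathcal{L}_{\mathrm{PM}}(\phi) = -\,\mathbb{E}_{(x,\,y_A,\,y_B,\,p)}\Big[\,p \log \sigma\big(r_\phi(x, y_A) - r_\phi(x, y_B)\big) + (1 - p) \log \sigma\big(r_\phi(x, y_B) - r_\phi(x, y_A)\big)\Big]
$$

In RLHF, $p$ is typically a hard label ($0$ or $1$) from a human rater; in RLAIF, $p$ can be the feedback model's normalized probability for option (A). The trained preference model then supplies the reward during reinforcement learning, commonly combined with a penalty (weighted by a tuning coefficient $\lambda$) that keeps the policy $\pi_\theta$ close to the initial model $\pi_0$:

$$
R(x, y) = r_\phi(x, y) - \lambda\, D_{\mathrm{KL}}\big(\pi_\theta(\cdot \mid x)\,\|\,\pi_0(\cdot \mid x)\big)
$$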

Benefits of CAI

CAI offers a number of benefits over traditional AI training approaches:

Reduced reliance on human feedback: By combining a constitution with Reinforcement Learning from AI Feedback (RLAIF), CAI models can learn and self-improve with far fewer human labels. This reduces the need for extensive human supervision, which is costly and time-consuming. Because the same written principles are applied to every comparison, AI feedback can also make labeling more consistent and easier to scale than approaches that rely heavily on human annotators, which may help reduce inconsistencies and biases that vary between individual raters.

Enhanced transparency and accountability: Because the constitution is written in natural language, the principles shaping a CAI model's behavior are explicit and open to inspection. CAI models can also explain their outputs and decisions with reference to these principles, making their reasoning clearer. This can foster understanding and trust while also making errors easier to detect and correct.

Greater adaptability: Because the principles are written down explicitly, they can be revised as circumstances and preferences change, and the model can then be retrained against the updated constitution. This makes CAI well-suited to a wide range of deployment contexts and allows models to evolve alongside changing human values and ethical norms.

Limitations of CAI

Although CAI offers many advantages, it’s important to be aware of its limitations, including:

Complexity of Constitution Design: Designing a comprehensive and accurate constitution for CAI systems that encapsulates intricate human values and ethics is a daunting task. The complexity of defining and implementing such a constitution can present significant challenges. Moreover, even with a well-crafted constitution, there may be limitations in covering every potential scenario and context that the AI system might encounter. This could lead to undesirable outcomes, necessitating human intervention or adjustments to the constitution.

Resource Requirements: The development and maintenance of CAI systems can demand considerable resources, including expert input, continuous updates and computational power, which could be a barrier for some organizations.

Limitations of Language Models: CAI assumes that language models can correctly interpret and apply the principles of the constitution when critiquing and revising their own responses, and when evaluating responses with chain-of-thought reasoning, during training. However, language models may have limitations or biases in their natural language understanding and generation abilities, which could affect how reliably they adhere to the principles of the constitution.

Real-World Applications of CAI

Now that we have outlined the main advantages and limitations of Constitutional AI, this section illustrates with a few examples how CAI can be applied across different domains and tasks.

Developing safe and ethical AI chatbots: CAI can be used to train AI chatbots that engage in natural and informative conversations with humans while avoiding harmful or inappropriate responses. One example of such a chatbot is Anthropic's Claude, which was trained using CAI.

Guiding AI systems in sensitive domains: CAI can be used to guide AI systems in sensitive domains, such as healthcare, finance and criminal justice, to ensure that their decisions are aligned with human values and ethical considerations. As an example, in the field of finance, CAI could be used to guide AI systems to make lending decisions that are fair and transparent while avoiding discrimination.

Developing AI tools for social good: CAI can be leveraged to create AI solutions aimed at addressing societal challenges such as climate change, poverty and inequality. For instance, in the context of climate change, CAI could ensure that AI tools align with international climate agreements and other relevant policies.

Conclusion

CAI offers a promising methodology for building AI systems that are reliable and transparent while requiring minimal human supervision. By leveraging a set of guiding principles, CAI can help to ensure that AI systems are safe, ethical and aligned with human values. As CAI research and development continues to advance, we can expect to see this innovative method play an increasingly important role in shaping the future of AI.

Additional Resources and References

Constitutional AI: Harmlessness from AI Feedback, Yuntao Bai, Jared Kaplan et al., Anthropic, December 15, 2022

Claude’s Constitution, Anthropic, May 9, 2023

What Is Reinforcement Learning from Human Feedback (RLHF) and How Does It Work?, Manuel Herranz, pangeanic.com, September 22, 2023

RLHF vs RLAIF for language model alignment, Ryan O’Connor, AssemblyAI, August 22, 2023