(Images made by author with MS Bing Image Creator)

As AI research delves into building smaller, more efficient language models, a key challenge emerges: to equip them with the reasoning and comprehension abilities of their larger counterparts. While learning from powerful “instructor” models like GPT-4 offers significant benefits, “student” models often fall short when faced with complex tasks that require advanced reasoning. This gap highlights the need for innovative methods to bridge the reasoning divide between smaller and larger language models.

This blog post explores Microsoft researchers’ efforts to address this challenge with Orca 2. This latest research model demonstrates that a combination of tailored synthetic data and optimized training methods can allow small language models (SLMs) to match much larger language models (LLMs) on complex reasoning tasks, opening up possibilities for more versatile and efficient language processing solutions.

What are SLMs?

Small language models (SLMs) are gaining ground in language processing, especially where efficiency and adaptability matter most. Unlike large language models (LLMs) such as GPT-4, which can have hundreds of billions of parameters, SLMs operate with far fewer, typically around 10 to 13 billion or fewer. This translates to much lower compute and memory requirements, making them a good fit for resource-constrained environments like edge devices and low-power mobile applications. Despite their size, SLMs are versatile and can tackle tasks such as text generation, translation, summarization, and question answering.
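To make the resource argument concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone occupy at 16-bit precision. The 175-billion-parameter entry is an illustrative size, not a figure for any specific model, and the estimate ignores activations, caches, and optimizer state.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the weights only, in GB (1 GB = 1e9 bytes).

    bytes_per_param=2 assumes 16-bit weights; this is an assumption,
    not something stated in the article.
    """
    return num_params * bytes_per_param / 1e9


# Parameter counts: 7B and 13B match the small models discussed in this post;
# 175B is an illustrative "large model" size chosen for comparison.
for label, params in [("7B", 7e9), ("13B", 13e9), ("175B (illustrative)", 175e9)]:
    print(f"{label}: ~{weight_memory_gb(params):.0f} GB of weights")
```

Under these assumptions a 7B or 13B model needs tens of gigabytes for its weights, while a model with hundreds of billions of parameters needs hundreds, which is the gap behind the efficiency and accessibility points that follow.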

SLMs show their strengths in several areas:

- Efficiency: SLMs excel in real-time language processing tasks in resource-limited environments, enabling applications like voice assistants and on-device translation.

- Prototyping: Unlike their larger counterparts, SLMs can be trained and deployed quickly and easily, significantly accelerating development cycles and fostering rapid prototyping and experimentation.

- Accessibility: By lowering the cost and infrastructure requirements associated with AI technology, SLMs democratize access and encourage wider adoption and innovation, making advanced language processing more accessible to a broader range of users and developers.

- Responsible AI: SLMs’ lower power consumption translates to a reduced environmental footprint, aligning with the principles of responsible AI.

- Explainability: Unlike the “black box” nature of many LLMs, SLMs offer greater transparency and explainability in their decision-making process, making them ideal for tasks requiring trust and understanding.

Despite these benefits, SLMs are not without limitations. For instance, their smaller size can lead to a lower capacity for knowledge retention and difficulty with tasks requiring in-depth understanding or complex reasoning. However, recent advancements are beginning to address these challenges, pushing the boundaries of SLM capabilities. One such example is Orca 2, an SLM equipped with enhanced reasoning abilities.

Inside Orca 2: Capabilities and Limitations

Orca 2 is the latest iteration in Microsoft’s Orca research project, which explores innovative technologies to create, enhance, and specialize small language models. Built upon Meta’s Llama 2, this pair of small language models, with 7 billion and 13 billion parameters, outperforms much larger ones in numerous complex reasoning tasks. Orca 2 achieves this performance through specialized training methods that teach it diverse reasoning techniques and how to choose a solution strategy suited to each task.

Training: Orca 2 was initialized from the pre-trained parameters of its base model, Llama 2 (7B and 13B). Its capabilities were then progressively improved through additional training on the FLAN-v2 task collection, the Orca 1 data, and the Orca 2 synthetic dataset. The Orca 2 dataset consists of approximately 817 thousand training instances (tasks and their corresponding outputs) constructed from four sources, covering zero-shot and few-shot data, math problems, and synthetically generated doctor-patient dialogues. This progressive learning approach allowed Orca 2 to perform strongly across a range of tasks, including reasoning, language comprehension, and math problem solving.
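The progressive approach described above can be pictured as a chain of fine-tuning stages, each starting from the checkpoint the previous stage produced. The sketch below is only an illustration of that ordering: `Stage`, `progressive_finetune`, and `fake_train` are hypothetical names, and the real training recipe (epochs, data mixing, hyperparameters) is not shown here.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    dataset: str  # label only; no real data is loaded in this sketch


# Stage order follows the description in this post.
STAGES = [Stage("FLAN-v2"), Stage("Orca 1"), Stage("Orca 2")]


def progressive_finetune(checkpoint, stages, train_fn):
    """Run stages in order; each stage starts from the previous checkpoint."""
    for stage in stages:
        checkpoint = train_fn(checkpoint, stage)
    return checkpoint


def fake_train(checkpoint, stage):
    # Hypothetical stand-in for a real fine-tuning run: it only records lineage.
    return f"{checkpoint} -> {stage.dataset}"


print(progressive_finetune("Llama 2", STAGES, fake_train))
```

Swapping `fake_train` for an actual training function would keep the same control flow: the base weights are never retrained from scratch, only refined stage by stage.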

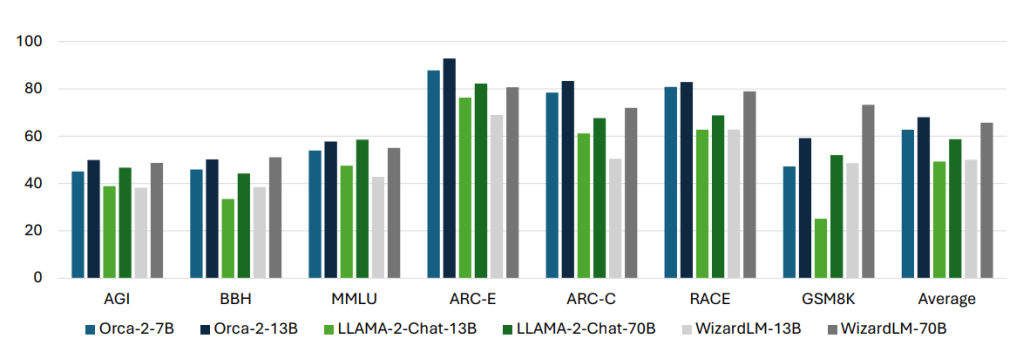

Performance: Orca 2 was evaluated on 15 diverse benchmarks assessing its knowledge, language understanding, reasoning, conversation, safety, and text completion, and compared against other models in each area. Notably, Orca 2 outperforms models of similar size and even matches or surpasses models 5-10 times larger on complex reasoning tasks, particularly in zero-shot settings, where the prompt contains no worked examples and the model must rely on its general knowledge and reasoning skills to solve new problems.

Source: Orca 2: Teaching Small Language Models How to Reason.
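For readers unfamiliar with the term, the difference between zero-shot and few-shot evaluation comes down to what the prompt contains. The following is a minimal sketch with a made-up `Q:`/`A:` template. It is not the prompt format used in the Orca 2 evaluation, and `build_prompt` is a hypothetical helper.

```python
def build_prompt(question, examples=()):
    """Build a zero-shot prompt when `examples` is empty, few-shot otherwise.

    The "Q:/A:" template is an assumption chosen for illustration.
    """
    parts = [f"Q: {q}\nA: {a}" for q, a in examples]
    parts.append(f"Q: {question}\nA:")  # the model completes this last answer
    return "\n\n".join(parts)


question = "A train travels 60 km in 1.5 hours. What is its average speed?"

zero_shot = build_prompt(question)
few_shot = build_prompt(question, examples=[("What is 12 * 3?", "36")])

print(zero_shot)
print("-----")
print(few_shot)
```

In the zero-shot case the model sees only the new question, so any reasoning strategy has to come from what it learned during training rather than from examples in the prompt.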

Limitations: Despite its capabilities, Orca 2 shares the limitations common to language models and inherits several from its base model, Llama 2. Like other LMs, Llama 2 is susceptible to biases in its training data, which can lead to biased or inaccurate outputs. Additionally, the safety guardrails applied during the generation of synthetic training data may be incomplete, so further study is needed to assess potential risks comprehensively. Finally, Orca 2’s compact size limits how much new knowledge it can absorb during training: the model prioritizes refining its reasoning abilities over acquiring vast knowledge, which constrains its performance on complex tasks that require a broad understanding across diverse domains.

Conclusion

Microsoft’s Orca 2 project is a notable contribution to the rapidly evolving landscape of language models. By leveraging innovative training methods and high-quality synthetic data, Orca 2 achieves reasoning abilities on par with much larger models on many complex reasoning tasks. While limitations exist and its primary purpose lies within the research domain, Orca 2 paves the way for a new generation of AI solutions that are accessible, efficient, and capable, pushing the boundaries of what SLMs can achieve.

References

Ahmed Awadallah, et al. “Orca 2: Teaching Small Language Models How to Reason”. Microsoft Research Blog, November 20, 2023.

Arindam Mitra, et al. “Orca 2: Teaching Small Language Models How to Reason”. arXiv, November 2023.

“Orca: Redefining small LM performance”. Microsoft Research.
