(Images made by author with MS Bing Image Creator)

While artificial intelligence (AI) holds the promise of transformative advancements, its vulnerability to malicious exploitation remains a pressing concern. Adversarial attacks, aimed at compromising AI systems, jeopardize their security and reliability. This post explores attack techniques and strategies to fortify AI resilience against these threats.

Table of Contents

Understanding Adversarial Motivations and Tactics

Machine Learning (ML) systems have become attractive targets for malicious actors seeking financial gain, access to sensitive data, or to inflict harm on individuals or organizations. To effectively defend against these threats, it’s essential to understand the motivations behind them and develop appropriate countermeasures.

Adversarial attacks come in various forms, each tailored to the specific goals and targets of the attacker. Common types include:

Data poisoning occurs when an adversary introduces harmful data into an AI model’s training set, causing it to learn incorrect or biased patterns. This can undermine the effectiveness and reliability of the ML model.

The following illustration demonstrates how adding a subtle layer of noise to an original image can cause a ML model to misclassify a panda as a gibbon, despite the differences between the two images being imperceptible to the human eye. An attacker can inject such subtly altered images into the training dataset with the intention of corrupting the model’s learning process and compromising its accuracy.

Source : OpenAI, Adapted from Ian J. Goodfellow & al, “Explaining and Harnessing Adversarial Examples”.

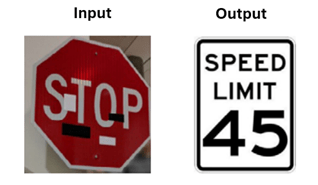

Input Manipulation : This type of attack involves altering the input data of a deployed AI system to produce incorrect or misleading outputs. For example, an input manipulation attack could cause the image recognition system of a self-driving car to misread a stop sign as a speed limit sign, as illustrated below. Similarly, in a voice assistant, an attacker could manipulate input data to make the assistant misunderstand a command.

Small changes added to a “Stop” sign trick the ML algorithm into misinterpreting it as a “Speed Limit 45” sign (source : Alexey Rubtsov, Global Risk Institute)

Extraction attacks, including data extraction and model inversion attacks, involve malicious actors using various techniques to extract sensitive information from ML models or the data used to train them. In a paper focusing on extractable memorization, published in November 2023, the authors demonstrated how adversaries could efficiently extract training data from language models by making specific types of queries, even without prior knowledge of the training dataset.

Understanding the Cost of Adversarial Attacks

Adversarial attacks can have serious and wide-ranging consequences, including operational disruptions, financial losses, and damage to an organization’s reputation. Some other significant costs associated with these attacks include:

Privacy: Adversarial attacks could violate the privacy of individuals or organizations by extracting sensitive information from ML models or the data used to train them. For example, attackers could use extraction attacks to steal sensitive personal information, leading to violations of privacy regulations and loss of trust from customers or clients.

Intellectual property rights: Adversarial attacks may infringe on the intellectual property rights of individuals or organizations by stealing trade secrets or proprietary algorithms. For example, attackers could use model extraction attacks to clone or reverse-engineer ML models without authorization or payment. This could potentially result in lost revenue, unfair competition, and legal issues regarding the ownership and liability of ML models for the victim organizations.

Integrity of ML systems: Adversarial attacks could reduce the integrity of ML systems by degrading their performance, functionality, or reliability. For example, attackers could use poisoning or evasion attacks to make ML models produce incorrect or biased predictions or outputs, which could have serious implications in contexts where these models are used to inform important decisions, such as in healthcare, finance, or criminal justice.

These examples underscore the importance of taking a proactive approach to mitigating the risks of adversarial attacks and protecting against their potential consequences.

Defensive Strategies for Enhancing AI Resilience Against Adversarial Attacks

In response to the growing threat of adversarial attacks ML experts are actively developing and deploying AI systems with enhanced robustness and resilience against such threats. The following examples illustrate how this goal is being accomplished:

Adversarial Training: This technique involves exposing AI models to adversarial examples during the training process, helping them build resistance to future attacks. However, this approach has limitations, as it can be resource-intensive, affect the model’s accuracy, and may not always be effective against all types of attacks.

Data Cleaning and Validation: This method involves carefully reviewing and cleansing the training data to remove any potentially malicious or corrupt inputs. Although this can help reduce the risk of data poisoning, it can be a challenging and time-consuming process, and may not guarantee complete protection against all types of attacks.

Ensemble methods: This approach combines multiple AI models to create a more robust system that can better defend against adversarial attacks. While this strategy can improve resilience, it’s important to carefully evaluate its effectiveness and monitor for ongoing security risks.

While these mitigation strategies are helpful, addressing adversarial attacks is an ongoing challenge. It requires continuous innovation in developing explainable AI models, collaboration between humans and AI, and international cooperation to establish shared safety and ethical standards.

Conclusion

Adversarial attacks pose a serious threat to the reliability and effectiveness of machine learning systems, but there are strategies that can be used to defend against them. Building robust AI systems through techniques such as adversarial training, data cleaning and validation, and ensemble methods can help to mitigate the impact of these attacks and increase the resilience of ML models. In addition, in the ever-changing field of AI, ongoing research, human-AI collaboration and international cooperation are essential to stay ahead of emerging threats and advance AI security. By identifying potential vulnerabilities and taking proactive steps to address them, we can help ensure that ML systems are used safely and effectively, maximizing their benefits and minimizing their risks.

Additional Resources

Florian Tramèr, et al., “Truth Serum: Poisoning Machine Learning Models to Reveal Their Secrets”, arXiv, October 2022.

Thilo Strauss, et al., “Ensemble Methods as a Defense to Adversarial Perturbations Against Deep Neural Networks”. arXiv, September 2017.

Jai Vijayan. Simple Hacking Technique Can Extract ChatGPT Training Data. Dark Reading, December 1, 2023.

Jordan Pearson. ChatGPT Can Reveal Personal Information From Real People, Google Researchers Show. Vice, November 29, 2023.

Note: This post was researched and written with the assistance of various AI-based tools.

Leave a comment