(Images created with the assistance of AI image generation tools)



There is a striking irony in today’s technology landscape: the very people building the most advanced artificial intelligence are also among the loudest voices warning of its potential dangers. Industry leaders such as Sundar Pichai, Demis Hassabis, Sam Altman, Dario Amodei, and Mustafa Suleyman—key architects of our AI future—are calling for caution, especially regarding the arrival of Artificial General Intelligence (AGI). In this post, we examine what AGI is, its potential societal impact, and the concerns these leaders have raised.

What Is Artificial General Intelligence?

Most of today’s AI systems, such as facial recognition or language translation, are examples of Artificial Narrow Intelligence (ANI): they excel at specific tasks but lack broader reasoning abilities.

Artificial General Intelligence (AGI), by contrast, refers to a hypothetical AI capable of understanding, learning, and performing any intellectual task a human can. An AGI would be able to apply knowledge across domains, learn new skills independently, and demonstrate common sense—capabilities that current AI does not possess.

While true AGI doesn’t exist yet, AI is advancing rapidly. Some experts believe it is decades away, while others, like Demis Hassabis, see a five-to-ten-year timeframe as plausible. Dario Amodei predicts that powerful, society-shaping AI systems could emerge as early as 2026 or 2027. Offering a more skeptical view, Yann LeCun argues that human-level AI is further off, asserting that current Large Language Models (LLMs) are not a viable path to AGI and that fundamentally new architectures are needed.

The Double-Edged Sword of AGI

AGI could help solve humanity’s most difficult problems, from breakthroughs in medicine to new approaches for tackling climate change, while also boosting productivity across the economy.

Yet these same capabilities raise serious concerns. AGI could automate a vast range of jobs, leading to widespread unemployment and increased inequality. It could also be misused for disinformation, surveillance, or autonomous weapons. A core challenge is the alignment problem: ensuring AGI reliably follows human values. Some industry insiders warn that a misaligned or uncontrollable superintelligent system could even pose an existential threat.

Global Stakes

AGI’s development will affect the entire world, not just technology hubs. Economically, it could widen the global wealth gap: wealthy nations with strong digital infrastructure, like the U.S., are better positioned to benefit, potentially reducing the competitive advantage of developing countries reliant on lower labor costs.

Geopolitically, the race to develop AGI may accelerate competition between major powers. While this can drive innovation, it may also undermine cooperation on safety standards—something many experts view as critical for managing global risks.

What AI Leaders Are Saying

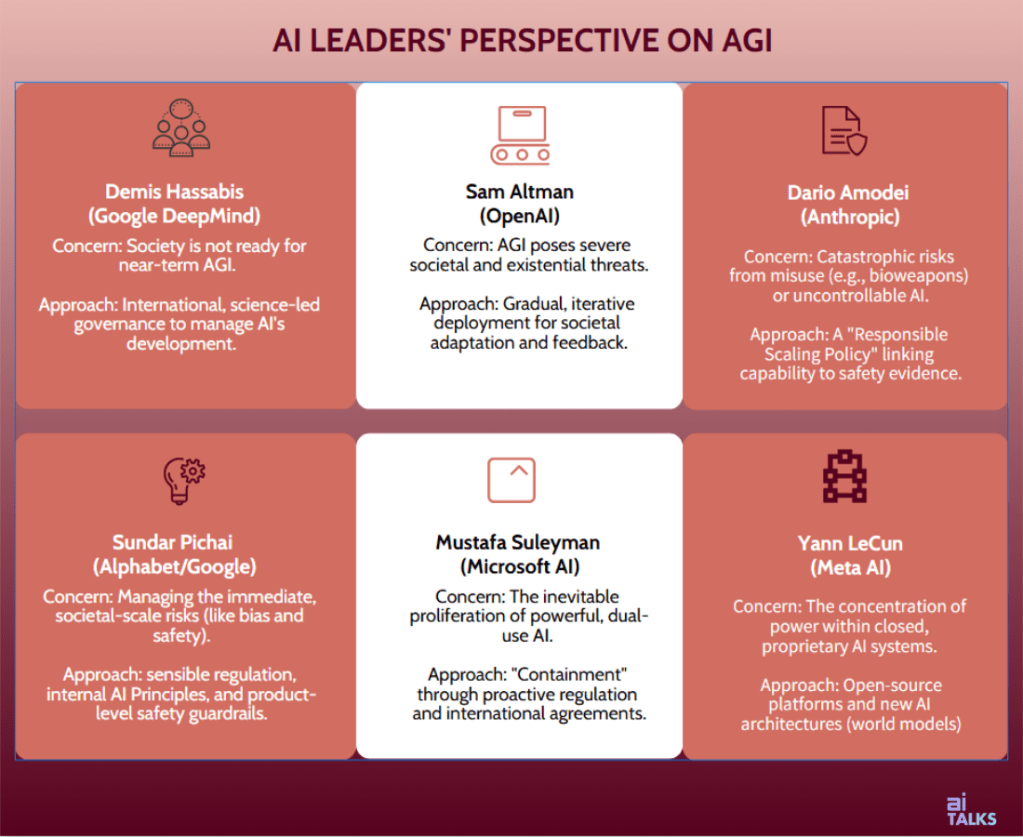

When the architects of today’s most powerful AI models sound the alarm, their warnings become especially noteworthy. Together, their views reveal a spectrum of concern about the technology’s trajectory.

A prominent group—Demis Hassabis (Google DeepMind), Sam Altman (OpenAI), and Dario Amodei (Anthropic)—emphasizes cautionary scaling. They assume the emergence of highly capable, potentially uncontrollable AI is a near- to medium-term inevitability, and focus on building robust frameworks to manage this transition safely:

- Demis Hassabis warns that society is unprepared for the imminent arrival of AGI and advocates for multilayered, international governance. Under his leadership, Google DeepMind has published “An Approach to Technical AGI Safety and Security,” a comprehensive framework proposing concrete protocols and interventions to ensure AGI development remains safe, secure, and aligned with human values.

- Sam Altman champions a strategy of “iterative deployment,” arguing that gradual, real-world rollout allows for continuous safety improvements and adaptation. At OpenAI, the firm he leads, this approach is embodied in the Preparedness Framework, which assesses models for “high” and “critical” capabilities and requires safeguards and internal safety reviews before and during deployment.

- Dario Amodei highlights catastrophic risks from both deliberate misuse, such as the creation of bioweapons, and unpredictable, emergent behaviors. At Anthropic, the company he co-founded, this concern is addressed through a multi-layered safety strategy, including the “Responsible Scaling Policy,” which mandates that safety evidence advance in lockstep with a model’s increasing capabilities.

A second perspective focuses on the immediate societal implications and control of today’s and tomorrow’s powerful AI systems:

- Sundar Pichai (Alphabet) advocates for sensible, risk-based regulation tailored to specific sectors, along with organizational incentives and product-level safety guardrails. He recognizes significant risks but maintains that AI, if managed responsibly, will ultimately benefit humanity.

- Mustafa Suleyman (Microsoft AI) calls for urgent “containment” measures, viewing the rapid proliferation of dual-use AI (with both beneficial and harmful impacts) as inevitable. He believes the primary danger lies in human-led destabilization and advocates for proactive international agreements and regulatory frameworks to manage this “coming wave” and prevent chaos.

Standing apart is Yann LeCun (Meta AI), a prominent voice among influential figures who believe the current path to AGI is flawed. He is not alone in this view, but here we focus on his perspective:

- Yann LeCun fundamentally critiques the current path to AGI (or what he prefers to call “Advanced Machine Intelligence”), arguing that today’s LLMs are technically incapable of achieving true intelligence because of architectural limitations, specifically their lack of reasoning, planning, and real-world understanding. He contends that the most pressing danger is not hypothetical superintelligence but the concentration of power within closed, proprietary AI systems. LeCun advocates a paradigm shift toward new, objective-driven AI architectures, especially those based on world models and multimodal learning, along with a steadfast commitment to open-source solutions to foster safe, transparent, and democratic innovation.

These perspectives reveal a crucial split, not just in proposed solutions but in the very diagnosis of the core problem. One camp, including Hassabis, Altman, and Amodei, focuses on the existential risk of a future, uncontrollable superintelligence and is building frameworks to govern its safe arrival. A second camp, represented by Pichai and Suleyman, emphasizes the immediate societal risks of today’s powerful AI, calling for corporate accountability and controls on global proliferation, while also acknowledging long-term concerns. Standing apart, Yann LeCun argues that the current technical path to AGI is fundamentally flawed and advocates open-source solutions and new, multimodal AI architectures.

Looking Ahead

Warnings from AI’s leading developers are a clear signal that society must prepare now. While AGI’s arrival date is uncertain, its potential to reshape the world is serious enough to require immediate attention.

This includes investing in AI safety research, developing national and international regulatory frameworks, and addressing the deep ethical questions AGI raises. The architects of today’s AI are urging us to act before AGI changes everything—because by the time it arrives, it may be too late to catch up.

Learn More

Altman, S. (2025, January 5). Reflections. Sam Altman Blog. https://blog.samaltman.com/reflections

Altman, S. (2025, June 10). The gentle singularity. Sam Altman Blog. https://blog.samaltman.com/the-gentle-singularity

Amodei, D. (2024, October). Machines of loving grace. Darioamodei.com. https://www.darioamodei.com/essay/machines-of-loving-grace

Anthropic. (2025, May 14). Responsible Scaling Policy, ver. 2.2. https://www-cdn.anthropic.com/872c653b2d0501d6ab44cf87f43e1dc4853e4d37.pdf

Bostrom, N. (2016). Superintelligence: Paths, dangers, strategies. Oxford University Press.

Google DeepMind. (2025, April 2). Taking a responsible path to AGI. https://deepmind.google/discover/blog/taking-a-responsible-path-to-agi/

Suleyman, M., & Bhaskar, M. (2025). The coming wave. Crown.

The Lex Fridman Podcast has also featured extensive interviews with many of the industry leaders discussed in this post.

This post was researched and written with the assistance of various AI-based tools.
